
Search engine optimization (SEO) is a vast field, with multiple technical and strategic elements affecting rankings. One crucial yet often overlooked aspect is crawl budget: how many pages of a website Googlebot (or another search engine crawler) will crawl, and how often.

For large websites, e-commerce platforms, and news portals, crawl budget optimization plays a key role in ensuring that important pages get indexed quickly, leading to better search visibility.

This guide will explain what crawl budget is, how it works, why it matters, and how to optimize it for better SEO performance.

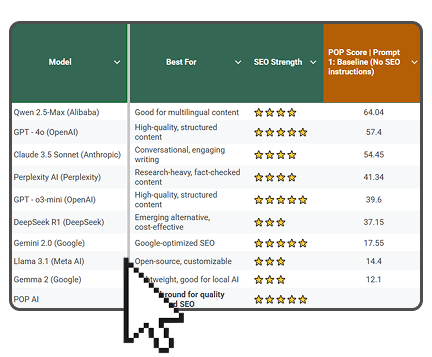

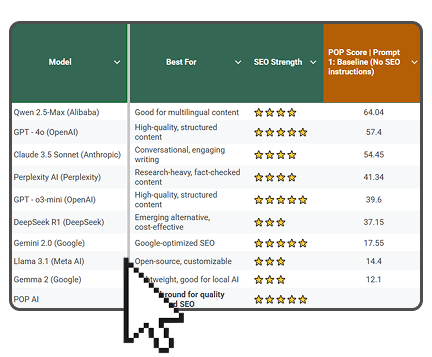

Which is the best LLM for SEO content?

Get the full rankings & analysis from our study of the 10 best LLMs for SEO Content Writing in 2026 FREE!

- Get the complete Gsheet report from our study

- Includes ChatGPT, Gemini, DeepSeek, Claude, Perplexity, Llama & more

- Includes ratings for all on-page SEO factors

- See how the LLM you use stacks up

What is Crawl Budget?

Crawl budget is the number of URLs a search engine bot (Googlebot, Bingbot, etc.) crawls on a website within a given timeframe. It consists of two main components:

- Crawl Rate Limit – The maximum number of simultaneous connections and requests a search engine crawler will use on a site without overloading the server (Google now calls this the crawl capacity limit).

- Crawl Demand – The priority Google assigns to crawling certain URLs based on their importance, freshness, and popularity.

In simple terms, Googlebot won’t crawl every single page of a website instantly. Instead, it follows a structured process based on website quality, internal linking, and crawl efficiency.

Understanding crawl budget requires recognizing that search engines allocate finite resources to each website. This allocation depends on factors such as site authority, server response times, content quality, and historical crawl patterns. The crawl rate represents the technical limitation: how fast bots can request pages without overwhelming your server infrastructure.

Crawl demand reflects the search engine's assessment of your content's value and urgency. Fresh, high-quality content that users frequently engage with receives higher crawl demand, while stale or low-value pages may be crawled less frequently. This dynamic system ensures search engines efficiently allocate their crawling resources across millions of websites.

Why is Crawl Budget Important for SEO?

Crawl budget optimization is especially crucial for:

- Large websites – Sites with thousands or millions of pages (e.g., e-commerce, directories, news portals).

- Frequent content updates – Blogs or news sites that need fresh pages indexed quickly.

- New websites – To ensure search engines discover and index all important pages.

- Sites with many low-value pages – If a website has many unimportant URLs (e.g., faceted navigation, duplicate pages), Google may waste crawl budget on them instead of high-priority pages.

If your site is not being crawled effectively, important pages might not appear in search results, impacting traffic and rankings.

The relationship between crawl budget and SEO performance becomes particularly evident when analyzing large-scale websites. E-commerce sites with thousands of product pages, news portals publishing multiple articles daily, and directory sites with extensive categorization all face unique crawl budget challenges. These sites must strategically guide search engines toward their most valuable content.

Understanding crawl activity patterns helps identify opportunities for optimization. Sites experiencing rapid growth, seasonal content changes, or frequent product updates need proactive crawl budget management to ensure new content gets discovered promptly. Without proper optimization, valuable pages may remain undiscovered for weeks or months, significantly impacting potential organic traffic.

Understanding Crawl Limit and Crawl Rate Factors

The crawl limit represents the maximum frequency at which search engines can request pages from your website without causing server performance issues. This limit depends on several technical factors including server response times, hosting infrastructure quality, and website architecture complexity.

Search engines continuously monitor server response patterns to determine appropriate crawl rates. Websites with fast, reliable servers typically receive higher crawl limits, while sites with slow response times or frequent errors may experience reduced crawl activity. This creates a direct correlation between technical performance and crawl budget allocation.

Budget optimization strategies must account for both crawl rate limitations and crawl demand factors. Improving server performance, optimizing database queries, and implementing efficient caching mechanisms can increase your crawl limit, allowing search engines to discover and index content more frequently.
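To make the interplay concrete, here is a toy Python model of how a crawler *might* adapt its rate to server health. It is an illustrative assumption, not Google's algorithm: the additive-increase/multiplicative-decrease rule (borrowed from TCP congestion control), the 1,000 ms "slow" threshold, the 5% error threshold, and the names `next_crawl_rate` and `urls_crawled` are all hypothetical. The second function shows why both components matter: the URLs fetched in a period are capped by whichever is smaller, capacity or demand.

```python
from dataclasses import dataclass


@dataclass
class HostHealth:
    """Observed server health for one crawl interval (hypothetical metrics)."""
    avg_response_ms: float  # average response time in milliseconds
    error_rate: float       # share of requests that returned 5xx, 0.0 to 1.0


def next_crawl_rate(current_rate: float, health: HostHealth,
                    slow_ms: float = 1000.0, max_error_rate: float = 0.05,
                    step: float = 1.0, backoff: float = 0.5,
                    floor: float = 0.1, ceiling: float = 50.0) -> float:
    """Toy AIMD rule: grow slowly while the host is healthy, halve on trouble."""
    if health.avg_response_ms > slow_ms or health.error_rate > max_error_rate:
        return max(current_rate * backoff, floor)  # back off quickly
    return min(current_rate + step, ceiling)       # probe upward gently


def urls_crawled(rate_per_s: float, seconds: int, demand: int) -> int:
    """URLs fetched in a period: limited by capacity AND by demand."""
    capacity = int(rate_per_s * seconds)
    return min(capacity, demand)
```

Starting at 5 requests per second, a healthy interval moves the toy rate to 6.0, a slow one halves it to 3.0, and the next healthy interval brings it back to 4.0. The asymmetry (fast decrease, slow increase) is the design choice that protects the server.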


How to Check Your Crawl Budget?

Before optimizing, it's essential to analyze how Google is crawling your website. Here’s how:

Google Search Console (GSC) → Crawl Stats

- Go to Google Search Console

- Navigate to Settings → Crawl Stats

- Check crawl requests, response times, and crawl frequency

Server Log Files

- Analyze server logs to see how frequently search engine bots crawl different pages.

- Identify crawl inefficiencies, errors, and wasted crawl budget.
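A minimal Python sketch of this log analysis, assuming your server writes the common Apache/Nginx "combined" log format (adjust the regex otherwise). `summarize_googlebot` is a hypothetical helper, not part of any tool. It trusts the user-agent string, which anyone can fake; Google recommends confirming real Googlebot traffic with a reverse DNS lookup to a Google-owned domain such as googlebot.com followed by a forward lookup, which this sketch skips.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Apache/Nginx "combined" log format (an assumption; adapt to your server).
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count requests per path and per status code for lines claiming to be Googlebot."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # unparseable line or a non-Googlebot client
        paths[match.group("path")] += 1
        statuses[match.group("status")] += 1
    return paths, statuses
```

Because file objects iterate line by line, you can pass an open log file directly. A high share of 404s, or of filter and parameter paths among the most-requested URLs, points to the crawl-budget waste described above.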

Screaming Frog or Site Audit Tools

- Use tools like Screaming Frog SEO Spider, Ahrefs, or Semrush to detect crawlable and indexable pages.

Analyzing Crawl Requests and Budget Allocation Patterns

Effective crawl budget analysis requires examining historical crawl requests data to identify patterns and inefficiencies. Google Search Console provides detailed insights into daily crawl activity, allowing you to understand how search engines interact with your website over time.

Crawl requests analysis reveals which pages receive the most attention from search engine bots and which areas of your site may be neglected. This information helps prioritize optimization efforts and identify potential crawl budget waste on low-value pages.
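Once you have the list of paths Googlebot requested (for example, extracted from your server logs), a few lines of Python show where the crawl budget goes. This is a minimal sketch built on two hypothetical heuristics: that the first path segment is a meaningful "section" of your site, and that URLs with query strings (filters, sorts, session IDs) are the likeliest waste. The function names are illustrative.

```python
from collections import Counter
from typing import Dict, List
from urllib.parse import urlsplit


def section_of(path: str) -> str:
    """Hypothetical grouping: the first path segment, e.g. /products/shoe -> /products/."""
    first = urlsplit(path).path.strip("/").split("/")[0]
    return f"/{first}/" if first else "/"


def crawl_share_by_section(paths: List[str]) -> Dict[str, float]:
    """Fraction of crawl requests spent on each section, largest first."""
    counts = Counter(section_of(p) for p in paths)
    total = sum(counts.values())
    if not total:
        return {}
    return {section: n / total for section, n in counts.most_common()}


def parameter_url_share(paths: List[str]) -> float:
    """Share of crawled URLs that carry a query string."""
    if not paths:
        return 0.0
    return sum(1 for p in paths if urlsplit(p).query) / len(paths)
```

If a section you consider low-value (or parameter URLs as a whole) takes a large share of requests, that is where blocking, canonicalization, or internal-link changes are most likely to pay off.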

Understanding seasonal crawl patterns, response time variations, and error frequency helps develop comprehensive budget optimization strategies. Sites with inconsistent crawl activity may need technical improvements or architectural changes to achieve more predictable search engine engagement.

How to Optimize Crawl Budget for SEO?

Now that we understand crawl budget, let’s explore 10 effective strategies to optimize it.

1. Prioritize Indexing of Important Pages

- Ensure that only valuable pages are indexed (e.g., landing pages, service pages, blog posts).

- Use Google Search Console’s Page indexing report (formerly the "Index Coverage Report") to check which pages are indexed.

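- Set low-priority pages to “noindex” if they don’t need to appear in search results. Keep in mind that Googlebot must still fetch a page to see its noindex directive, so noindex controls indexing more than it saves crawl budget; for URLs that should not be crawled at all, use robots.txt (see step 4).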
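Because noindex can be set either in an HTML meta tag or in an X-Robots-Tag HTTP header, auditing it means checking both. A minimal standard-library Python sketch; the helper names are hypothetical, it only recognizes meta tags named `robots` or `googlebot`, and it does not distinguish which crawler a header line targets.

```python
from html.parser import HTMLParser
from typing import Dict, List


def _has_noindex(value: str) -> bool:
    # "none" means "noindex, nofollow"; headers may also look like "googlebot: noindex".
    tokens = {t.strip().lower() for t in value.replace(":", ",").split(",")}
    return "noindex" in tokens or "none" in tokens


class _RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.contents: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.contents.append(attributes.get("content", ""))


def is_noindex(headers: Dict[str, str], html: str) -> bool:
    """True if the X-Robots-Tag header or a robots meta tag says noindex."""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and _has_noindex(value):
            return True
    parser = _RobotsMetaParser()
    parser.feed(html)
    return any(_has_noindex(content) for content in parser.contents)
```

Running this over the pages you intend to keep indexed is a quick way to catch a template that accidentally ships noindex.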

2. Optimize Site Architecture for Better Crawling

A well-structured website improves crawlability and indexability.

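- Use a flat website structure so important pages aren’t buried under layers of categories and subcategories (e.g., /category/subcategory/page); how many clicks a page sits from the homepage matters more than how many folders appear in its URL.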

- Ensure important pages are no more than 3 clicks away from the homepage.

- Improve internal linking to guide search engines to important pages.
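Click depth is the number of links a crawler must follow from the homepage, which makes it a breadth-first search over your internal link graph. A minimal Python sketch, assuming you have exported that graph (for example, from a site crawler) as a dict mapping each URL to the URLs it links to; the function names and the 3-click default are illustrative.

```python
from collections import deque
from typing import Dict, Iterable, List


def click_depths(links: Dict[str, Iterable[str]], home: str) -> Dict[str, int]:
    """Breadth-first search: fewest clicks from the homepage to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def pages_too_deep(links: Dict[str, Iterable[str]], home: str,
                   max_depth: int = 3) -> List[str]:
    """Reachable pages that sit more than max_depth clicks from the homepage."""
    return sorted(p for p, d in click_depths(links, home).items() if d > max_depth)
```

Pages that never appear in the result are unreachable from the homepage by links at all, which is a stronger warning sign than excessive depth.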

3. Improve Internal Linking

Google follows links to discover new content. Optimize your internal links by:

- Linking to high-priority pages from key navigation areas.

- Avoiding orphan pages (pages with no internal links).

- Using descriptive anchor text (e.g., "Best SEO Tools" instead of "Click here").
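An orphan page is one that exists (it is in your sitemap or CMS) but that no other page links to, so finding orphans is a set difference. A minimal Python sketch, assuming a list of known URLs and an internal link graph exported as a dict mapping each page to the pages it links to; the format and the function name are hypothetical.

```python
from typing import Dict, Iterable, List


def find_orphans(known_urls: Iterable[str],
                 links: Dict[str, Iterable[str]],
                 home: str = "/") -> List[str]:
    """Known URLs that no page links to (the homepage is excluded)."""
    linked = {target for targets in links.values() for target in targets}
    return sorted(set(known_urls) - linked - {home})
```

Each orphan either needs a link from a relevant, crawlable page or, if it no longer matters, removal from the sitemap.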

4. Block Crawling of Unnecessary Pages in Robots.txt

Some pages should not be crawled at all, such as:

- Admin or login pages

- Duplicate pages (e.g., tag pages, search results pages)

- Faceted navigation (filters generating multiple URLs)

Use the robots.txt file to block unwanted URLs from being crawled. Example:

```txt
User-agent: Googlebot
Disallow: /wp-admin/
Disallow: /search-results/
Disallow: /filter/
```

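⚠️ Note: Blocking a page in robots.txt doesn’t mean it won’t be indexed; Google can still index a blocked URL (without its content) if other pages link to it. To keep a page out of search results, use a "noindex" meta tag or X-Robots-Tag header, and leave that page crawlable, because Googlebot can only see noindex on pages it is allowed to fetch.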
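You can sanity-check rules like these before deploying them with Python's standard-library `urllib.robotparser`. This parser follows the original robots.txt conventions (rules checked in file order, no `*` or `$` wildcards inside paths), whereas Google applies the most specific matching rule and supports wildcards, so treat it as a rough check for simple prefix rules like the example above. The helper names are hypothetical.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /wp-admin/
Disallow: /search-results/
Disallow: /filter/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_urls(parser: RobotFileParser, urls: Iterable[str],
                 agent: str = "Googlebot") -> List[str]:
    """Return the URLs this user agent is not allowed to fetch."""
    return [u for u in urls if not parser.can_fetch(agent, u)]
```

Note that this example file only has a `Googlebot` group, so other crawlers such as Bingbot are not restricted by it at all; add a `User-agent: *` group if you want the rules to apply to every bot.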

5. Fix Broken Links (404 Errors)

Links to broken pages send crawlers to dead ends, spending requests that could have gone to live pages.

- Use Google Search Console to find and fix 404 pages.

- Redirect important broken links using 301 redirects.
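Fixing a broken link means updating the page that contains it, not only the missing URL. Given crawl output as a link graph plus the HTTP status of each target (both hypothetical inputs you would export from a site crawler), this minimal Python sketch lists every internal link that hits a 404/410 or passes through a redirect, since linking straight to the final URL saves the crawler an extra request. The function name is illustrative.

```python
from typing import Dict, Iterable, List, Tuple

Link = Tuple[str, str]  # (source page, target URL)


def audit_links(links: Dict[str, Iterable[str]],
                status: Dict[str, int]) -> Tuple[List[Link], List[Link]]:
    """Split internal links into (broken, redirected) lists."""
    broken, redirected = [], []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target)
            if code in (404, 410):
                broken.append((source, target))       # fix or remove the link
            elif code in (301, 302, 307, 308):
                redirected.append((source, target))   # point at the final URL
    return sorted(broken), sorted(redirected)
```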

6. Avoid Duplicate Content Issues

Duplicate content confuses search engines and wastes crawl budget.

- Use canonical tags (rel="canonical") to indicate preferred versions of pages.

- Avoid unnecessary URL variations (e.g., HTTP vs. HTTPS, www vs. non-www).
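Canonical tags tell search engines which variant you prefer, but it also helps to know how your own URLs collapse. A minimal Python sketch (3.9+ for `str.removeprefix`) that normalizes common variants into one form. The rules are assumptions to adapt: HTTPS plus a preferred `www.` host, lowercase hostnames, no ports, dropping `utm_*` tracking parameters and fragments, and sorting the remaining query parameters, which is only safe if your application ignores parameter order. `normalize_url` and `www.example.com` are placeholders.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PREFIXES = ("utm_",)  # hypothetical: extend with your own tracking params


def normalize_url(url: str, preferred_host: str = "www.example.com") -> str:
    """Collapse common duplicate-URL variants into one canonical form."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # .hostname also drops any :port
    # Treat example.com and www.example.com as the same site.
    if host.removeprefix("www.") == preferred_host.removeprefix("www."):
        host = preferred_host
    query = sorted(
        (k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if not k.lower().startswith(TRACKING_PREFIXES)
    )
    return urlunsplit(("https", host, parts.path or "/", urlencode(query), ""))
```

Grouping crawled URLs by `normalize_url` shows how many requests were spent on variants of the same page.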

7. Optimize XML Sitemap

An XML sitemap guides Googlebot to essential pages.

- Keep only high-quality URLs in the sitemap.

- Avoid duplicate, redirected, or “noindex” URLs in the sitemap.

- Submit the sitemap in Google Search Console.

Example of an optimized sitemap:

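```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/homepage</loc>
    <lastmod>2024-03-04</lastmod>
    <!-- Google ignores <priority>; other search engines may still read it -->
    <priority>1.0</priority>
  </url>
</urlset>
```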
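If your sitemap is generated from a page inventory, the rules in the list above can be applied automatically. A minimal Python sketch using the standard library's `xml.etree.ElementTree`; the `Page` record and its fields are hypothetical stand-ins for whatever your CMS or crawler exports. Only URLs that return 200, are not noindexed, and are their own canonical make it in, and `<priority>` is omitted because Google ignores it.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Iterable, Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    status: int                      # HTTP status seen by your crawler
    noindex: bool = False
    canonical: Optional[str] = None  # None means no canonical tag
    lastmod: Optional[str] = None    # W3C date, e.g. "2024-03-04"


def sitemap_eligible(page: Page) -> bool:
    """Keep only live, indexable, self-canonical URLs."""
    return (page.status == 200 and not page.noindex
            and page.canonical in (None, page.url))


def build_sitemap(pages: Iterable[Page]) -> str:
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if not sitemap_eligible(page):
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page.url  # "&" is escaped automatically
        if page.lastmod:
            ET.SubElement(entry, "lastmod").text = page.lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")
```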

8. Reduce Page Load Time (Improve Crawl Efficiency)

Slow responses reduce crawl capacity: when a server responds slowly or returns errors, Google lowers its crawl rate, so fewer URLs get fetched in the same amount of time.

- Compress images, enable caching, and use a CDN.

- Minimize JavaScript and CSS blocking elements.

- Use Google PageSpeed Insights to check performance.

9. Use "Last-Modified" HTTP Headers

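By sending a "Last-Modified" header (or an ETag), you let Googlebot make conditional requests with If-Modified-Since. If a page hasn’t changed, your server can answer 304 Not Modified without resending the page body, which saves bandwidth and server resources on every re-crawl.

- Ensure your web server sends Last-Modified dates in HTTP headers and answers If-Modified-Since requests with 304 when the content is unchanged.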

Example:

```http
Last-Modified: Mon, 04 Mar 2024 12:00:00 GMT
```
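On the server side, honoring conditional requests looks roughly like this framework-agnostic Python sketch: compare the crawler's If-Modified-Since header with the page's last modification time and return 304 when nothing has changed. The function name and the `(status, headers)` return shape are hypothetical; in practice your web server or framework often handles this for static files, and `last_modified` is assumed to be timezone-aware.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Optional, Tuple


def conditional_get(last_modified: datetime,
                    if_modified_since: Optional[str]) -> Tuple[int, Dict[str, str]]:
    """Return (status, headers): 304 if unchanged since the client's copy, else 200."""
    # HTTP dates have one-second precision, so drop microseconds before comparing.
    last_modified = last_modified.astimezone(timezone.utc).replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):  # malformed date: ignore the header
            since = None
        if since is not None:
            if since.tzinfo is None:
                since = since.replace(tzinfo=timezone.utc)
            if last_modified <= since:
                return 304, headers  # client copy is current; send no body
    return 200, headers  # send the full page
```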

10. Submit Important URLs Manually for Faster Crawling

If a new page is not getting crawled, submit it manually:

- Go to Google Search Console → URL Inspection Tool

- Click "Request Indexing"

Advanced Crawl Budget Optimization Techniques

Beyond basic optimization strategies, advanced crawl budget management involves understanding how technical SEO elements interact with search engine behavior. Structured data markup does not control crawling directly, but by making content and its relationships easier to interpret, it may support how search engines judge a page's importance.

Monitoring crawl activity across different search engines reveals varying crawl patterns and preferences. While Google may prioritize certain content types, Bing crawlers might focus on different page characteristics. Understanding these differences helps develop comprehensive optimization strategies that maximize visibility across multiple search platforms.

Server-side optimization plays a crucial role in crawl budget efficiency. Implementing efficient database queries, optimizing server response times, and utilizing content delivery networks can significantly increase crawl limits. These technical improvements create a foundation for sustained crawl budget optimization.

Managing Crawl Budget for Different Content Types

Different content types require tailored crawl budget approaches. News sites with time-sensitive content need aggressive crawl optimization to ensure rapid indexing of breaking stories. E-commerce platforms must balance product page crawling with category navigation and user-generated content.

Blog sites benefit from strategic internal linking and content freshness signals that encourage regular crawl activity. Understanding how search engines evaluate content freshness, user engagement, and topical relevance helps optimize crawl demand for specific content categories.

Social media integration and external link building can influence crawl demand by demonstrating content popularity and authority. Pages that receive significant social engagement or high-quality backlinks often experience increased crawl frequency as search engines recognize their value.

Monitoring and Measuring Crawl Budget Performance

Effective crawl budget optimization requires continuous monitoring and measurement of key performance indicators. Tracking crawl frequency, indexing speed, and organic traffic growth provides insights into optimization effectiveness and areas requiring adjustment.

Establishing baseline metrics before implementing optimization strategies enables accurate performance assessment. Regular analysis of crawl stats, server logs, and search console data reveals trends and patterns that inform ongoing optimization efforts.

Setting up automated alerts for crawl anomalies, server errors, and indexing issues enables proactive crawl budget management. Early detection of problems prevents crawl budget waste and maintains optimal search engine engagement levels.
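Automated alerts can start simple. A minimal Python sketch, assuming you already collect daily Googlebot request counts and daily 5xx counts (for example, from server logs): it flags days whose crawl volume deviates more than 50% from the trailing 7-day average, and days where more than 5% of crawl requests hit server errors. The thresholds and the function name are illustrative assumptions, not recommended values.

```python
from statistics import mean
from typing import List, Tuple


def crawl_alerts(requests: List[int], errors_5xx: List[int],
                 window: int = 7, tolerance: float = 0.5,
                 max_error_share: float = 0.05) -> List[Tuple[int, str]]:
    """Return (day_index, reason) pairs for days that look anomalous."""
    alerts = []
    for day, count in enumerate(requests):
        if day >= window:
            baseline = mean(requests[day - window:day])  # trailing average
            if baseline and abs(count - baseline) > tolerance * baseline:
                alerts.append((day, "crawl volume anomaly"))
        if count and errors_5xx[day] / count > max_error_share:
            alerts.append((day, "high server error share"))
    return alerts
```

A relative threshold against a trailing average is deliberately crude; it avoids false alarms from normal day-to-day noise while still catching a sudden crawl drop after, say, a robots.txt mistake.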

How Long Does It Take for Google to Crawl a Page?

There is no fixed timeline. Google crawls pages based on priority:

- New websites: Can take days to weeks for the first crawl.

- Established sites: High-priority pages can be crawled within hours or days.

- Low-priority pages: May take weeks or months to be re-crawled.

Factors influencing crawl speed include site authority, internal linking, and content freshness.

Understanding crawl timing expectations helps set realistic goals for content publication and optimization strategies. High-authority sites with strong crawl budgets can expect faster discovery and indexing of new content, while newer sites may need patience and strategic optimization to achieve similar results.

Content freshness signals, including publication dates, update timestamps, and social engagement metrics, influence crawl timing. Search engines prioritize recently updated, relevant content that demonstrates ongoing value to users. Implementing these signals strategically can accelerate crawl frequency for important pages.

Crawl Budget Impact on Different Search Engines

While Google dominates search traffic, understanding crawl budget implications across different search engines provides comprehensive optimization benefits. Bing crawlers exhibit different behavior patterns and may prioritize alternative ranking factors compared to Google's algorithms.

International search engines like Baidu, Yandex, and regional platforms each have unique crawl budget characteristics and optimization requirements. Sites targeting global audiences must consider these variations when developing crawl budget strategies.

Search engine crawlers continue evolving their algorithms and crawl patterns based on user behavior, technological advances, and competitive pressures. Staying informed about crawler updates and algorithm changes ensures crawl budget optimization remains effective over time.

Final Thoughts: Crawl Budget Optimization for Better SEO

Crawl budget plays a vital role in SEO, especially for large and complex websites. If Googlebot spends too much time on unimportant pages, your key pages might not get crawled or indexed.

By implementing these strategies (optimizing site architecture, blocking unnecessary URLs, fixing broken links, improving load speed, and refining internal linking), you can help ensure that Google crawls the right pages efficiently.

A well-optimized crawl budget leads to faster discovery and indexing of your important pages, which in turn supports search visibility and organic traffic.

Successful crawl budget optimization requires ongoing attention and regular refinement based on performance data and search engine algorithm updates. The investment in proper crawl budget management pays dividends through improved search visibility, faster content discovery, and enhanced overall SEO performance.

Remember that crawl budget optimization is not a one-time task but an ongoing process that evolves with your website's growth and changing search engine requirements. Regular monitoring, testing, and adjustment of crawl budget strategies ensure sustained SEO success and competitive advantage in search results.
