In 2026, AI tools are the go-to for content writing. Whether you're drafting a blog post, building out landing pages, or updating product descriptions, it’s common practice nowadays to turn to a large language model (LLM) for support. And with how fast these tools have improved, it's no wonder.

Given how many people are using them, it makes sense to assume that these tools understand how to write content that ranks and appears in AI Overviews. But do they really?

Which is the best LLM for SEO content?

Get the full rankings & analysis from our study of the 10 best LLMs for SEO Content Writing in 2026 FREE!

- Get the complete Gsheet report from our study

- Includes ChatGPT, Gemini, DeepSeek, Claude, Perplexity, Llama & more

- Includes ratings for all on-page SEO factors

- See how the LLM you use stacks up

The Common Assumption About SEO and AI

Ask any AI to “write in an SEO friendly manner” or “add relevant keywords for SEO,” and it will quickly and confidently give you polished content. You can even ask it to “optimize headings and structure for search engines,” and it’ll give you something that looks solid on the surface.

But if you've ever published AI-generated content to your site and waited for traffic that never came, or noticed your content didn’t even get indexed, you're not alone. The reality is, those prompts don’t always produce the results we expect. The writing may sound clean, but it’s often missing what regular and AI search engines actually need in order to rank your content.

Writing Alone Isn’t Enough

Today’s LLMs are great at sounding human. In fact, they’ve become so good that it’s hard to tell the difference between AI writing and human writing. But SEO content writing is about more than just good grammar and clean sentences. It’s about structure, context, topic depth, and semantic signals that help search engines understand what your content is really about.

And that’s where many popular LLMs fall short. They can write, but they don’t always handle the SEO side of the job.

We Put 10 Popular LLMs to the Test

We didn’t want to rely on guesses or assumptions about the quality of LLM-written content. So we ran a hands-on test. We compared 9 of the most popular LLMs used by SEO professionals and marketers today. These are all real-world models people use for writing every day.

The 10th tool is a surprise I’ll reveal shortly.

Our goal? To find out which language model actually delivers the best performance when it comes to creating content that both reads well and ranks well. We measured each one on SEO strength, content quality, and how well it responded to optimization prompts.

The findings might surprise you. If you’ve been struggling to get your AI content indexed or ranked, what we discovered could change the way you write.

How to Write Content for SEO in 2026?

Writing content that ranks today isn’t what it used to be. In 2026, creating content that performs well in search engines requires more than just “good” writing. Whether you're working with a human writer or using AI tools, there are two things you need to get right: quality and optimization.

1. Create High-Quality, Helpful Content

Google no longer rewards ‘fluff’. If your content doesn’t answer real questions or add clear value, it won’t rank, no matter how well it's formatted. The same goes for AI-generated content. It might look clean and polished, but if it lacks depth or misses key points, it won’t move the needle.

Today’s SEO content writing must:

- Be specific and relevant to the search intent

- Get straight to the point without unnecessary filler words

- Include sourced data and verified stats when possible

- Offer something unique, not just a rewording of what's already out there

Thanks to advances in LLMs since 2023, AI writing has improved significantly. The content can pass AI detection tools and reads well to most people. But there’s still a big question: Do AI models actually know how to optimize content for LLM SEO?

2. Write for Search Engines and Large Language Models

It’s still true. If your content isn’t indexed, it won’t be found. That’s why writing for search engines is just as important as writing for people. You can’t just hope your LLM gets it right. You need to guide it with structure, formatting, and signals that help search engines crawl and understand the page.

That’s exactly what we tested at PageOptimizer Pro.

We used RankEngine, the engine behind our advanced SEO analysis tool, POP, to score content written by 10 popular AI models (including our own). Each model was tested on what we consider to be the most important SEO metrics to see which one produced the best overall content for ranking.

What We Measured (And Why It Matters)

- POP Score

Think of this like a report card. It scores how well the content follows SEO best practices. Most content needs to hit at least 80% to start seeing meaningful movement in Google rankings.

- Word Count

Longer content can help, but only if it stays useful. We checked if each LLM could follow basic instructions like writing 1,000 words and if that content had enough depth to cover the topic properly.

- Readability Score

Good SEO content needs to be easy to read. We measured:

- Reading Ease Score: Higher scores = simpler content

- Reading Level: Ideally at a 7th grade level, which is best for the average web reader

- Structural SEO Elements

We also analyzed how well each LLM handled common SEO structure:

- Title Tags: Did the model include 2–5 strong keywords and helpful variations?

- Headlines: Were the titles clear and relevant to the topic?

- Subheadings: Did the content break down into sections using 10–18 important topic terms?

- Section Lengths: Did each part of the article include enough content (167–278 words) to hold its own weight?

- Google NLP Terms: Did the content use enough semantic keywords (14–60 words) to help search engines understand the topic?
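To make those target ranges concrete, here is a minimal sketch of how counts could be checked against them. It is an illustration only, not how RankEngine works internally: the `TARGETS` dictionary and `check_targets` function are hypothetical names, and the sample counts are the figures reported for the SEO-optimized prompt in this study.

```python
# Target ranges quoted in this article (inclusive bounds).
# The metric names are hypothetical labels chosen for this sketch.
TARGETS = {
    "title_terms": (2, 5),
    "subheading_terms": (10, 18),
    "main_content_terms": (167, 278),
    "google_nlp_terms": (14, 60),
}


def check_targets(counts):
    """Label each metric as 'below', 'within', or 'above' its target range."""
    report = {}
    for metric, (low, high) in TARGETS.items():
        value = counts[metric]
        if value < low:
            report[metric] = "below"
        elif value > high:
            report[metric] = "above"
        else:
            report[metric] = "within"
    return report


# Counts reported in this study for the SEO-optimized prompt.
pop_ai = {"title_terms": 5, "subheading_terms": 18,
          "main_content_terms": 260, "google_nlp_terms": 60}
gemma_2 = {"title_terms": 1, "subheading_terms": 0,
           "main_content_terms": 10, "google_nlp_terms": 1}

print(check_targets(pop_ai))
print(check_targets(gemma_2))
```

With the reported numbers, POP AI lands inside every range while Gemma 2 falls below all four.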

Not All AI Models Are Built for LLM SEO

Some Large Language Models can write smooth, human-like paragraphs. Others are better at structure or length. But only a few come close to hitting all the technical SEO targets. And even fewer can do it consistently.

That’s what made this comparison interesting. We didn’t just ask, “Which AI can write well?” We asked, “Which one creates content that actually helps you rank?”

The results? You'll see which AI tools delivered and which ones missed the mark. It's all backed by real data and scoring from POP, our tool built on over 400 SEO tests.

“What you will learn from this study is how well 9 of the top AI models did when it came to optimizing content for search engines and humans vs our own tool, POP. And the results will surprise you.”

Which LLMs Did We Put Head-to-Head in This Test?

To find out which AI tools actually produce the best content for SEO, we ran a real-world study using 10 of the most popular LLMs in 2026. These are the AI models that SEOs, content writers, and digital marketers are using every day to scale up writing tasks.

How do they compare when it comes to SEO content writing? We tested each LLM using the same prompts and scored the outputs on how well they followed SEO best practices, including structure, readability, and keyword integration. Some tools excelled at writing clean copy. Others struggled to create content that search engines could understand or index.

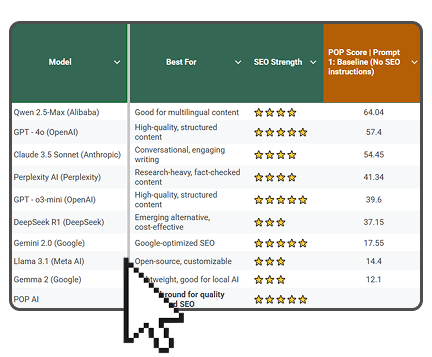

Here’s a quick overview of the models we tested:

These AI models were all given the same tasks — one prompt with no SEO guidance, and one with optimization instructions. Some delivered strong results on their own. Others only improved when prompted with SEO guidance. And one stood out above the rest.

How the LLM SEO Test Was Set Up

To figure out which LLM actually produces the best SEO content writing, we needed a fair and consistent way to compare them. So we tested each AI model on two different prompts and scored their outputs across several important SEO parameters. The goal was to see not only how well they write, but whether they understand how to create content that gets indexed by search engines.

What We Tested (And Why)

Each LLM was given the same topic to write about. First, we asked the AI tools to generate a basic article with no SEO help. Then we asked them to write the same article again, but this time with a clear SEO prompt. Both versions were analyzed using PageOptimizer Pro’s RankEngine to see how each model handled structure, keywords, readability, and on-page SEO.

This gave us two views for every AI model:

- How well does it write content out of the box?

- Can it actually optimize when asked to?

Part 1: Baseline Prompt (No SEO Instructions)

The first step was simple. We wanted to see how each LLM would respond without any hints about SEO. This showed us their natural ability to generate readable, structured content on a general topic.

Prompt: "Write an article about what to see in Rome in 1000 words. Output html format."

We picked this topic because it’s broad, familiar, and competitive. The kind of topic that millions of people search for and that websites actively try to rank for. The goal here was to test the raw writing strength of the AI tools and how well they handle:

- Word count and topic coverage

- Basic formatting like headings and paragraphs

- Natural use of topic-relevant terms

Part 2: SEO-Optimized Prompt – Do These Models Actually Understand SEO?

For the second part of the test, we wanted to see if LLMs actually “get” SEO when you ask them to. This is where we introduced an SEO-focused instruction and looked closely at how the content changed.

Prompt: "Write an SEO-optimized article about what to see in Rome in 1000 words. Output html format."

This told the models to apply their understanding of SEO. We were looking for clear signs that they could:

- Structure the content using proper headings and subheadings

- Use keywords and variations naturally

- Improve readability and engagement

- Write with search engines in mind, not just readers

This is the kind of prompt many marketers use when trying to create optimized content with AI tools. But does simply adding the word “SEO” make a difference? Our test set out to find out.
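If you want to repeat this kind of two-prompt comparison yourself, the setup can be sketched in a few lines. This is a rough outline under assumptions, not our actual test harness: `run_test` and `echo_model` are hypothetical names, and the stand-in model simply echoes the prompt where a real run would call each vendor's API.

```python
from typing import Callable, Dict

# The two prompts used in this study, quoted verbatim.
PROMPTS = {
    "baseline": "Write an article about what to see in Rome in 1000 words. "
                "Output html format.",
    "seo": "Write an SEO-optimized article about what to see in Rome in "
           "1000 words. Output html format.",
}


def run_test(models: Dict[str, Callable[[str], str]]) -> Dict[str, Dict[str, str]]:
    """Send every prompt to every model and collect the raw HTML outputs."""
    return {
        name: {label: generate(prompt) for label, prompt in PROMPTS.items()}
        for name, generate in models.items()
    }


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call, used here for demonstration only."""
    return f"<html><body><p>Response to: {prompt}</p></body></html>"


outputs = run_test({"echo": echo_model})
for label, html in outputs["echo"].items():
    print(label, len(html))
```

Keeping the prompts in one place means every model receives exactly the same wording, which is what makes the comparison fair.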

What We Learned So Far About LLM SEO Services

Some LLMs made noticeable improvements with the SEO prompt. Others changed very little. A few even got worse by overstuffing keywords or cutting down the useful content. It became clear that not all AI models understand what SEO content writing really means.

Next, we’ll show you the exact criteria we used to score each output and how these AI models performed in side-by-side comparisons.

How We Scored the LLMs Against One Another (The Criteria)

To figure out which AI model truly delivers the best SEO content writing, we needed a consistent way to evaluate every piece of content. That’s where our scoring framework came in. We used PageOptimizer Pro’s RankEngine to measure how well each LLM handled both writing quality and SEO structure. Not just how the content read, but how well it would perform in search engines.

Each AI model was scored across 5 core SEO categories that play a major role in getting content indexed and ranked.

1. POP Score: Overall SEO Optimization

The POP Score is a comprehensive measure of how well the content follows SEO best practices. It’s built from over 100 real SEO factors, including structure, on-page elements, and content signals that search engines look for. Most pages start to rank better when they hit a POP Score of 80% or more.

This score is the clearest indicator of whether an AI tool can generate optimized content that actually works, not just sounds good.

2. Word Count: Can the Language Models Hit the Target?

While longer isn’t always better, SEO content still needs to be thorough. We asked every AI model to write 1,000 words. We then checked how closely they followed that instruction and whether the length added real value.

Some AI tools stopped short. Others added fluff to hit the number. We looked for LLMs that could write with purpose and stay focused while meeting or exceeding the content length.
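Since every model was asked for HTML output, checking length means counting visible words rather than raw characters. The sketch below shows one way to do that with Python's standard library. It is an assumption about how such a check could be done, not a description of POP's own counter, and `visible_word_count` and `deviation_from_target` are hypothetical helper names. The three sample counts are the baseline figures reported in this article.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def visible_word_count(html):
    """Count whitespace-separated words in the visible text of an HTML string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.parts).split())


def deviation_from_target(count, target=1000):
    """Percentage by which a word count overshoots (+) or undershoots (-) the target."""
    return (count - target) / target * 100


sample = ("<h1>What to See in Rome</h1><p>The Colosseum is a must.</p>"
          "<style>p{color:red}</style>")
print(visible_word_count(sample))

# Baseline word counts reported in this article.
for model, words in [("Claude 3.5 Sonnet", 1023), ("GPT-o3 mini", 1394), ("Gemma 2", 537)]:
    print(model, round(deviation_from_target(words), 1))
```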

3. Readability Metrics: Is the Content Easy to Read?

Search engines still reward clarity. We measured two core readability scores:

- Reading Ease Score: Higher is better. Content that’s clear and simple wins. Both for users and search engines.

- Reading Level: The best writing for the web sits around a 7th grade level. This helps make content accessible and more likely to perform in broader search results.

LLMs that wrote overly complex or academic-sounding content missed the mark here.
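For readers who want to see how scores like these are typically calculated, here is a minimal sketch. It assumes the Reading Ease Score and Reading Level correspond to the standard Flesch Reading Ease and Flesch-Kincaid Grade Level formulas, which this article does not state explicitly. The syllable counter is a rough vowel-group heuristic, so results on real text will differ somewhat from dedicated tools.

```python
import re


def flesch_reading_ease(words, sentences, syllables):
    """Flesch Reading Ease: higher scores mean simpler text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level: approximate US school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def estimate_syllables(word):
    """Rough heuristic: count groups of consecutive vowels, minimum one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def text_stats(text):
    """Return (word count, sentence count, estimated syllable count)."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    syllables = sum(estimate_syllables(w) for w in words)
    return len(words), len(sentences), syllables


stats = text_stats("Rome is full of history. The Colosseum is a must for every visitor.")
print(round(flesch_reading_ease(*stats), 2))
print(round(flesch_kincaid_grade(*stats), 2))
```

Shorter sentences and shorter words both push the Reading Ease score up and the grade level down, which is why dense, academic-sounding output scores poorly.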

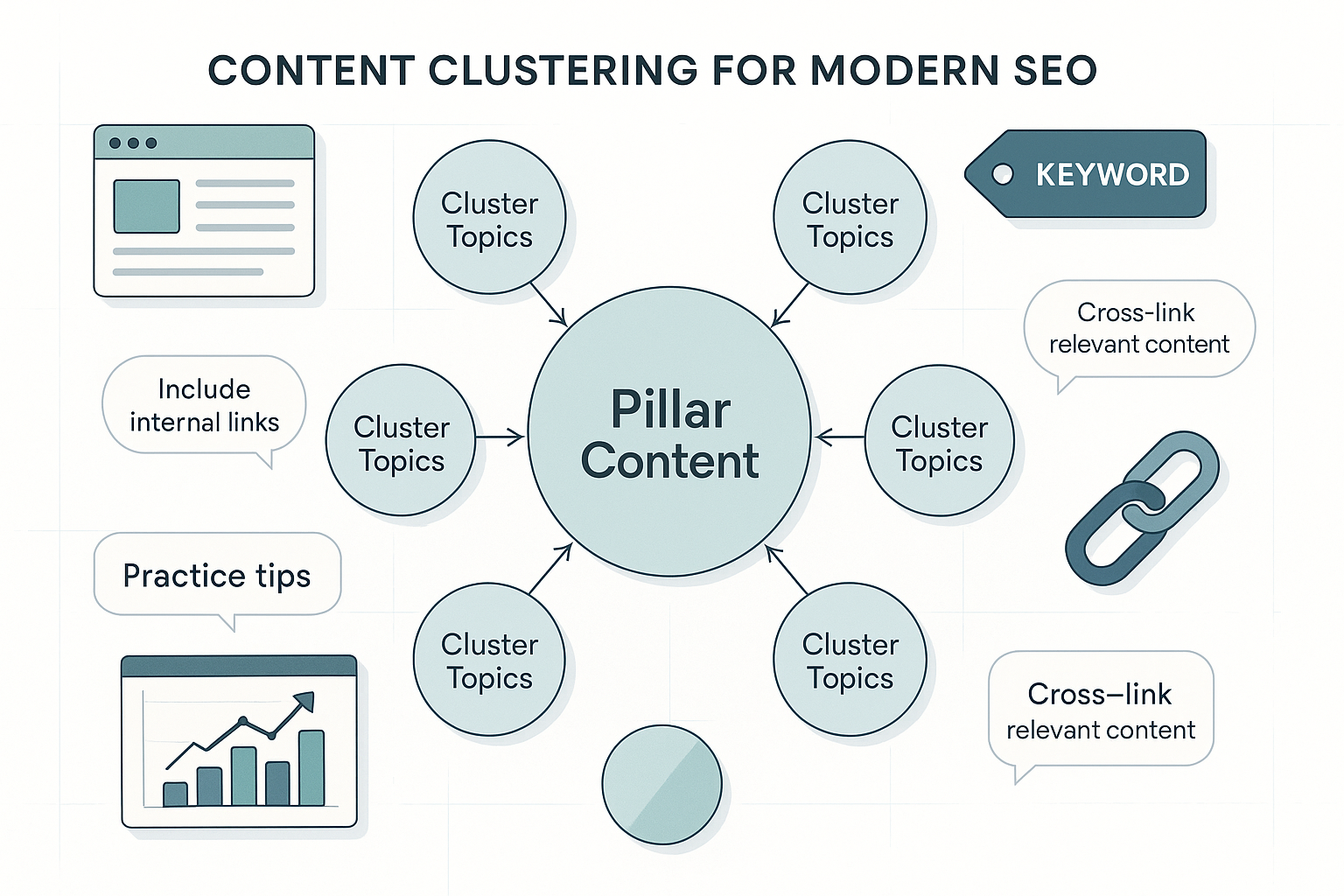

4. Variations & LSI Terms: Strong Use of Related Keywords

Search engines look at more than just one keyword. They analyze the full context of your content. That’s where Latent Semantic Indexing (LSI) and keyword variations come in.

We scored each model on how well it used:

- Keyword variations in headings, subheadings, and body text

- Related topic terms throughout the article

- Structure that supports these terms naturally

LLMs that nailed this created content with strong relevance and better alignment with how Google understands search intent.
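As a rough illustration of what counting terms in subheadings can look like, the sketch below pulls the text out of `<h2>` to `<h6>` tags and counts how often a list of terms appears. The term list is invented for this example, `HeadingCollector` and `subheading_term_hits` are hypothetical names, and the simple substring matching is an assumption rather than POP's actual method.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect the text of <h2> through <h6> subheadings."""

    SUBHEADINGS = {"h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # tag name of the subheading being read, if any

    def handle_starttag(self, tag, attrs):
        if tag in self.SUBHEADINGS:
            self._current = tag
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.headings[-1] += data


def subheading_term_hits(html, terms):
    """Count term occurrences in subheadings (case-insensitive substring match)."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    text = " ".join(collector.headings).lower()
    return sum(text.count(term.lower()) for term in terms)


sample_html = ("<h1>What to See in Rome</h1>"
               "<h2>The Colosseum and Roman Forum</h2><p>Details here.</p>"
               "<h2>Vatican City Highlights</h2>")
# Hypothetical term list, for illustration only.
sample_terms = ["colosseum", "roman forum", "vatican", "trevi fountain"]
hits = subheading_term_hits(sample_html, sample_terms)
print(hits)
```

In this sample the two subheadings contain three of the four terms, far short of the 10-18 target discussed in this article.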

5. Google NLP Terms: Semantic Signals That Matter

Google uses natural language processing (NLP) to analyze how well content matches search queries. One of the best signals is how many Google NLP terms (semantic keywords) show up in the main content area.

For our test, we looked at whether the AI tools could naturally include 14 to 60 important semantic terms related to the topic. This helped us see which LLMs were just writing to write, and which were actually writing for search.
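A simple way to picture this check is to count how many terms from a reference list appear at least once in the article text. The sketch below does exactly that. It does not call Google's NLP service, the term list is made up for illustration, and `covered_terms` is a hypothetical helper name.

```python
import re


def covered_terms(text, terms):
    """Return the terms that appear at least once as whole words (case-insensitive)."""
    found = []
    for term in terms:
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(term)
    return found


# Hypothetical term list, for illustration only.
sample_terms = ["Colosseum", "Roman Forum", "Trevi Fountain",
                "Vatican Museums", "Pantheon"]
sample_text = ("Start at the Colosseum, walk to the Roman Forum, "
               "then toss a coin in the Trevi Fountain.")

found = covered_terms(sample_text, sample_terms)
print(len(found), "of", len(sample_terms), "terms covered")

# The range this article recommends for Google NLP terms.
in_range = 14 <= len(found) <= 60
print("within 14-60 range:", in_range)
```

Counting distinct terms rather than total mentions keeps the check from rewarding keyword stuffing.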

What This Scoring Revealed

By combining these five categories, we could see exactly where each LLM stood out in their responses, and where they fell short. Some models produced clean writing but ignored structure. Others nailed the formatting but missed the semantic signals needed for ranking.

The result? A detailed, side-by-side look at which popular AI tools are really up to the task of SEO content writing, and which one gives you the best shot at getting indexed and ranked.

That analysis is turned into a clear POP Score. The higher the score, the more likely the page is to get indexed and climb in search results.

The Results: SEO Optimized Prompt

Do These Models Actually Understand SEO?

For this test, we kept it simple—we just told the LLMs to give us an 'SEO-optimized' article. No deep guidance, no keyword lists, no extra instructions—just, 'Make it SEO-friendly.' The goal? To see if these models actually understand what SEO content means on their own.

Turns out, they don’t.

Instead of delivering well-optimized, keyword-rich content, most of these models reshuffled words, added fluff, and barely touched keyword strategy. While some scores improved, it was clear they were just formatting text differently, not optimizing for search rankings.

POP Score Analysis

The POP Score is the key measure of how well AI-generated content aligns with SEO best practices. A high POP Score means you’re creating content Google actually wants to rank. A low score? Good luck getting past page 5.

Here’s how different models performed with the SEO optimized prompt:

✅ Best in POP Score: POP AI (100). Most pages start to get good movement in Google when they hit a POP score of 80.

❌ Worst in POP Score: Gemini 2.0 (18.71)

Word Count

Getting the word count right is an important part of good SEO. The limitation with LLMs is that they cannot match their output length to the article length Google is rewarding for a particular keyword. This is something only POP is able to do, which is why POP’s word count score was 50% higher than those of the other LLMs.

✅ Best in Word Count: POP AI (1581)

❌ Worst in Word Count: Gemini 2.0 (551)

Readability Metrics

Readability isn’t a ranking factor but most people want internet content to be around a 7th grade level so that their visitors can read and understand the content quickly.

✅ Best Readability: GPT-4o (most accessible model)

❌ Hardest to Read: Gemma 2 (Borderline unreadable. Complex language doesn’t mean quality, and in SEO, clarity wins.)

Variations & LSI Terms Analysis

Search Engine & Page Titles (Target: 2-5)

✅ SE & Page Title Winner: POP AI with 5 keywords in the title

❌ SE & Page Title Losers: Gemini 2.0 and Gemma 2 with only 1 keyword in the title each. This is a critical SEO failure since optimized titles are essential for better search relevance and click-through rates.

Sub-headings (Target: 10-18)

Headings aren’t just for readability - they help Google break down content for better indexing and ranking. Without enough important words in the subheadings, the content lacks clarity and scannability, making it harder for both users and search engines to understand the key sections of the page.

A well-optimized article should spread Latent Semantic Indexing (LSI) terms and keyword variations across subheadings and main content to maximize ranking potential.

✅ Sub-headings Winners: POP AI with 18 important terms and DeepSeek R1 with 10 important terms.

❌ Sub-headings Loser: Gemma 2 (0 LSI or variations words in sub-headings) - essentially no important terms in structure, meaning Google will struggle to index its content properly.

Main Content (Target: 167-278)

Only POP AI approached the ideal content depth per section. A well-optimized page needs enough text in each section to properly develop topics with supporting LSI terms, and improve ranking.

Gemma 2 performed the worst, meaning it lacked sufficient content in key areas, making it highly unlikely to rank well. If a section doesn’t provide enough supporting details, it fails to reinforce keyword relevance, weakening the page’s authority in Google's eyes.

✅ Main Content Winner: POP AI with 260 LSI/Variations words in main content

❌ Main Content Loser: Gemma 2 with only 10 LSI/Variations words - a massive SEO failure, making it nearly impossible to rank well.

Google NLP (Target: 14-60)

Google NLP scores measure how well an article aligns with Google’s understanding of a topic, ensuring that enough critical keywords and entities are present for strong ranking signals.

✅ Google NLP Winner: POP AI (with 60 NLP terms) - this model included the most relevant keywords, making it the most aligned with Google’s expected topic structure. GPT-o3 mini, Qwen 2.5-Max, DeepSeek R1 and Llama 3.1 also included the most essential terms Google expects, giving them a ranking advantage.

❌ Google NLP Loser: Gemma 2 (1 NLP term) - completely failing to include key ranking terms, making it nearly invisible to Google’s algorithms.

Why PageOptimizer Pro (POP)?

The numbers backed it up. While many AI tools produced decent content, only POP consistently delivered writing that was both high quality and optimized to perform in search engines. POP achieved the highest average SEO scores, hitting the benchmarks across structure, keyword use, readability, and semantic relevance.

Where Most LLMs Fell Short

Most of the LLMs in our test did an acceptable job when it came to formatting content and creating smooth paragraphs. When we prompted them to “optimize for SEO,” they made changes, mostly to the headline and subheadings. Some models even added more keywords.

But here’s where things fell apart:

- Main content depth dropped in many cases

- LSI terms were underused or missing completely

- Semantic coverage weakened, making the article less authoritative

- Google NLP terms dropped below the recommended 14–60 range

Even the best-known AI tools (including GPT-4o and Claude 3.5) struggled to balance SEO structure with meaningful substance in their responses. This meant the content might sound good but lacked what search engines look for when deciding what to rank and index.

SEO Prompt ≠ SEO Results

Adding “SEO-optimized” to your prompt doesn’t guarantee the content will actually be optimized. That’s one of the biggest takeaways from this study. These AI models don’t fully understand what SEO content writing involves, and they often misinterpret what’s needed for real search visibility.

In short, LLMs can write, but that doesn’t mean they can optimize.

Why POP Came Out on Top

POP is different. It’s more than just an AI writer. It’s a scientific SEO tool that uses data-backed recommendations to shape your content. Powered by RankEngine™, POP scores your content against real competitors and tells you exactly what to fix or improve. That includes:

- Keyword targets and variations

- Ideal word counts

- Heading and subheading structure

- Google NLP term coverage

- On-page SEO elements that help your content get indexed

POP gives you the best of both worlds: quality writing from an integrated AI assistant and deep SEO analysis that helps your content actually rank.

If you're serious about using AI tools for SEO content writing, this test shows that relying on general-purpose models isn’t enough. The best results come when writing is guided by real optimization tools. And that’s exactly what POP was built for.

Baseline Prompt (No SEO Instructions) - Raw AI Output

POP Score Analysis

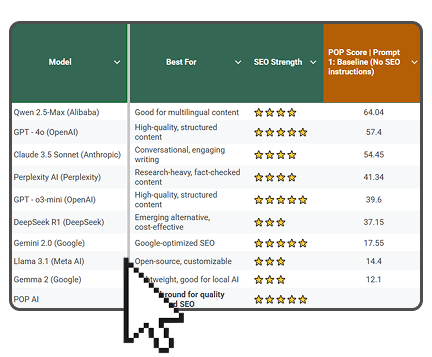

Here’s how different models performed with the baseline prompt:

✅ Best: Qwen 2.5-Max (64.04) - it gets SEO structure right. GPT-4o (57.4) - reasonable SEO alignment. Claude 3.5 Sonnet (54.45) - decent, but not great.

❌ Worst: Gemma 2 (12.1) - basically the AI equivalent of a brick for SEO.

Word Count Comparison

✅ On Target: Claude 3.5 Sonnet (1,023 words) - nailed the 1000-word request.

❌ Too Chatty: GPT-o3 mini (1,394 words) - clearly doesn't know when to stop talking.

❌ Way Too Short: Gemma 2 (537 words) - SEO content needs meat, and this one was barely a snack.

Readability Metrics

✅ Easiest to Read: GPT-4o (55.09 Reading Ease Score) - If you want content that’s smooth and digestible, this is your model.

❌ Most Complex: Claude 3.5 Sonnet (35.06 Reading Ease Score) - Get ready to decode some dense AI text.

Variations & LSI Terms Analysis

Latent Semantic Indexing (LSI) terms and keyword variations are crucial for ranking because Google expects content to include related and supporting terms that enhance topic relevance. A well-optimized article should spread these terms across subheadings and main content to maximize ranking potential.

Search Engine & Page Titles (Target: 2-5 important words)

✅ SE & Page Title Winners: Most models generated optimized titles with 2 important terms, which is within an acceptable range.

❌ SE & Page Title Losers: Gemini 2.0, Llama 3.1, and Gemma 2 only generated titles with 1 important term, meaning they missed a critical SEO signal - properly structured titles help Google understand the page topic better and increase click-through rates. Missing an optimized title means your page may not clearly communicate relevance to Google, hurting ranking potential.

Sub-headings (Target: 10-18 LSI/Variations important words in Sub-headings)

All models failed to reach the ideal range, making their structure weak. Headings aren’t just for readability—they help Google break down content for better indexing and ranking. Without enough important words in the subheadings, the content lacks clarity and scannability, making it harder for both users and search engines to understand the key sections of the page.

❌ Qwen 2.5-Max (8), followed by DeepSeek R1 and Perplexity AI (6 each), performed the best, but even they didn’t hit the ideal range of 10-18 important words in the headings.

Main Content (Target: 167-278 LSI/Variations words in Main Content)

This is one of the most critical ranking factors - thin content won’t cut it. If a section doesn’t provide enough supporting details, it fails to reinforce keyword relevance, reducing the page’s chances of being seen as authoritative by search engines.

✅ Main Content Winners: GPT-o3-mini (53) and Qwen 2.5-Max (52) came closest to the ideal content depth, though both remained well below the 167-278 target. A well-optimized page needs enough text in each section to properly develop topics with supporting LSI terms and improve ranking.

❌ Main Content Loser: Gemma 2 (14) performed the worst, meaning it lacked sufficient content in key areas, making it highly unlikely to rank well.