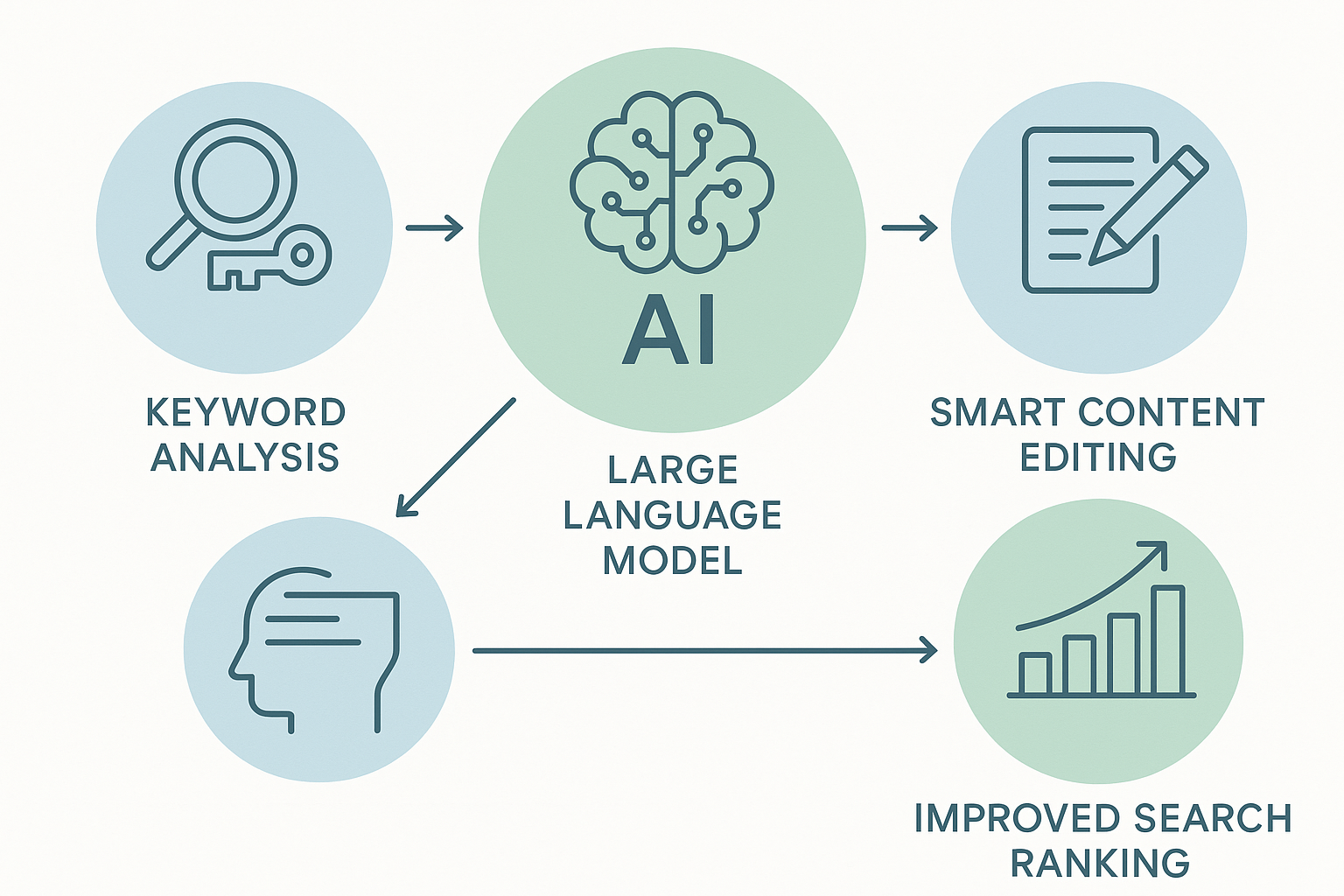

Large language models, often shortened to LLMs, are changing how SEO teams approach content creation and optimization. Instead of starting every page from a blank document, marketers can now generate structured drafts, rewrite underperforming sections, and test new angles in a fraction of the time. This shift has made it easier to keep pages aligned with how people search while maintaining consistency across large content libraries.

LLMs do not replace SEO strategy, testing, or competitive analysis. The strength of these systems comes from accelerating the writing and revision process so teams can spend more time validating pages against real ranking signals. When paired with a data-driven on-page tool like Page Optimizer Pro, large language model SEO becomes part of a workflow focused on measurable improvements rather than guesswork. Read on to see how large language models help SEO.

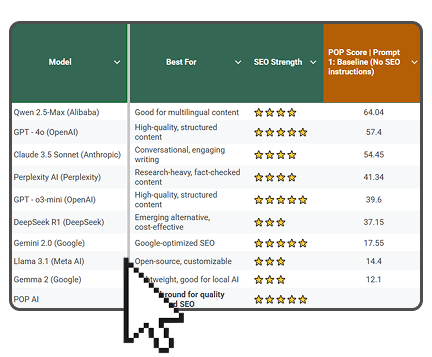

Which is the best LLM for SEO content?

Get the full rankings & analysis from our study of the 10 best LLMs for SEO content writing in 2026, FREE!

- Get the complete Gsheet report from our study

- Includes ChatGPT, Gemini, DeepSeek, Claude, Perplexity, Llama & more

- Includes ratings for all on-page SEO factors

- See how the LLM you use stacks up

How LLMs Influence Modern SEO Workflows

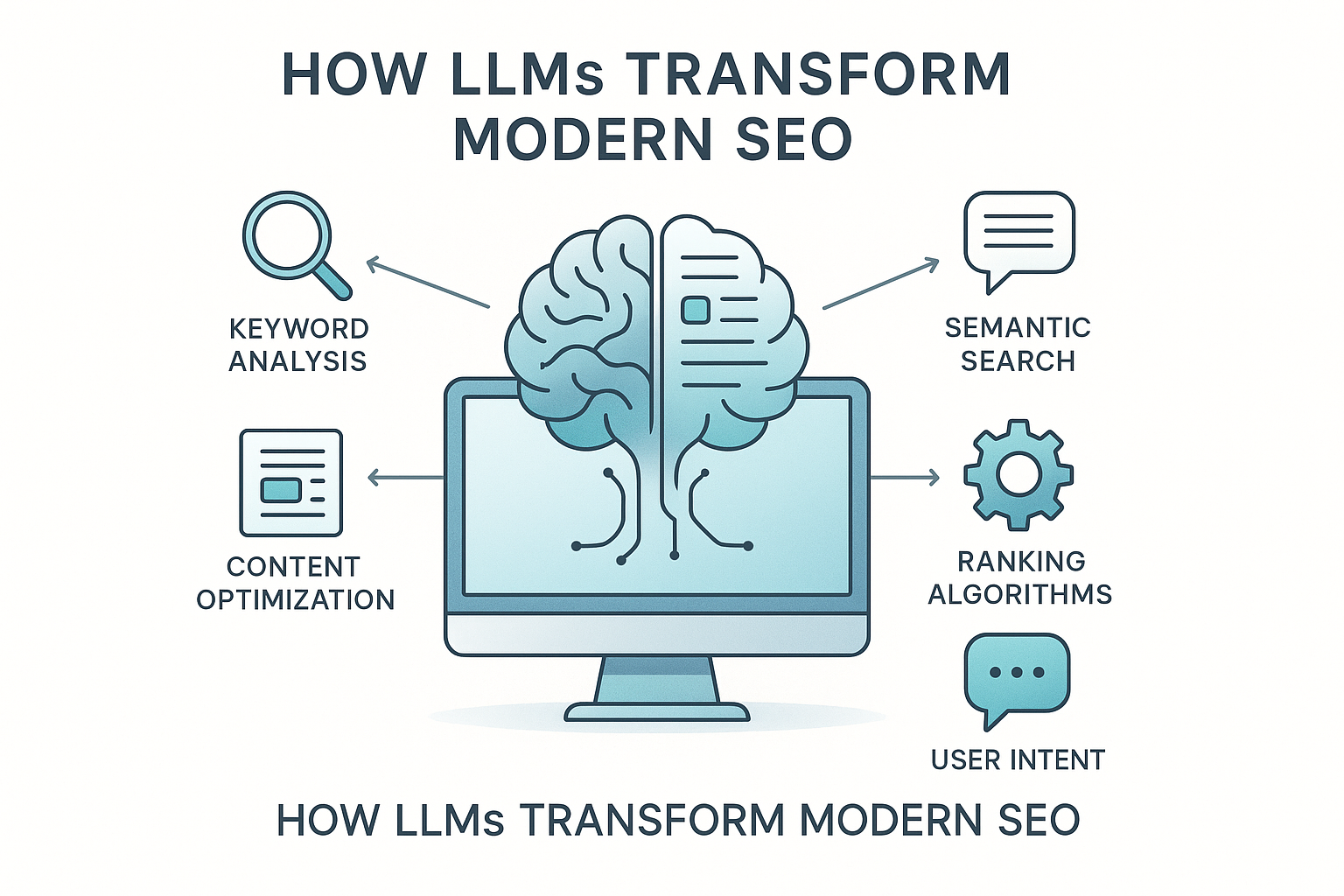

Search behavior has evolved. Queries are longer, intent is clearer, and users expect pages to answer related questions without forcing additional searches. Large language models (LLMs) have become important tools to help meet these expectations by modeling how language is used across top-ranking pages and reflecting that structure in new content.

One of the biggest changes is speed. SEO teams can move from idea to draft quickly, which makes testing easier. Instead of waiting weeks between updates, pages can be refined more often, allowing small improvements to compound over time. This faster iteration cycle is particularly valuable for affiliate-focused sites, where page-level adjustments and rapid testing often influence revenue outcomes. LLMs also help maintain tone and terminology across multiple writers, which is especially useful for SaaS brands with strict editorial standards.

Another benefit is clarity. LLMs can simplify dense explanations, improve transitions, and reorganize content so it is easier to scan. These improvements support both user experience and search performance, since pages that communicate clearly tend to perform better in competitive results. Over time, this leads to cleaner site-wide patterns and systems that make ongoing optimization easier to manage and scale across growing content libraries.

Large Language Model SEO: Where LLMs Add the Most Value for SEO Teams

LLMs are most effective when used for specific tasks rather than full automation. During the research phase, they can surface common subtopics, summarize patterns across ranking pages, and suggest angles that align with different stages of awareness. This helps teams plan pages that reflect what searchers expect to see and how topics are commonly framed in competitive results.

During writing and editing, LLMs support faster revisions. They can rewrite headings for clarity, expand thin sections, or tighten long paragraphs without changing the meaning. This makes them especially useful for content refreshes, where the goal is improvement rather than reinvention and where consistency across updates matters. For writers and content teams, this approach supports higher output while still providing a clear quality check before content goes live.

LLMs also assist with supporting SEO tasks that often slow teams down. Drafting internal link suggestions, proposing schema text, or outlining update notes becomes easier when language generation is handled quickly. For freelance SEOs, this kind of structured support helps deliver data-backed recommendations efficiently while simplifying client implementation and communication. All drafts should still be reviewed and validated to ensure accuracy and alignment with performance goals.

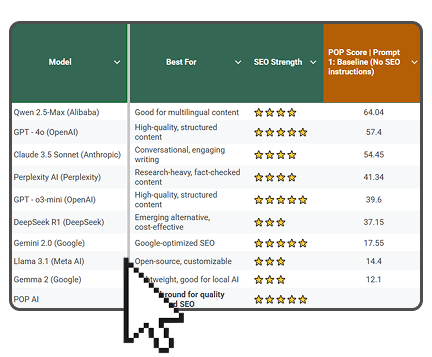

A Practical SEO Workflow Using LLMs and Page Optimizer Pro

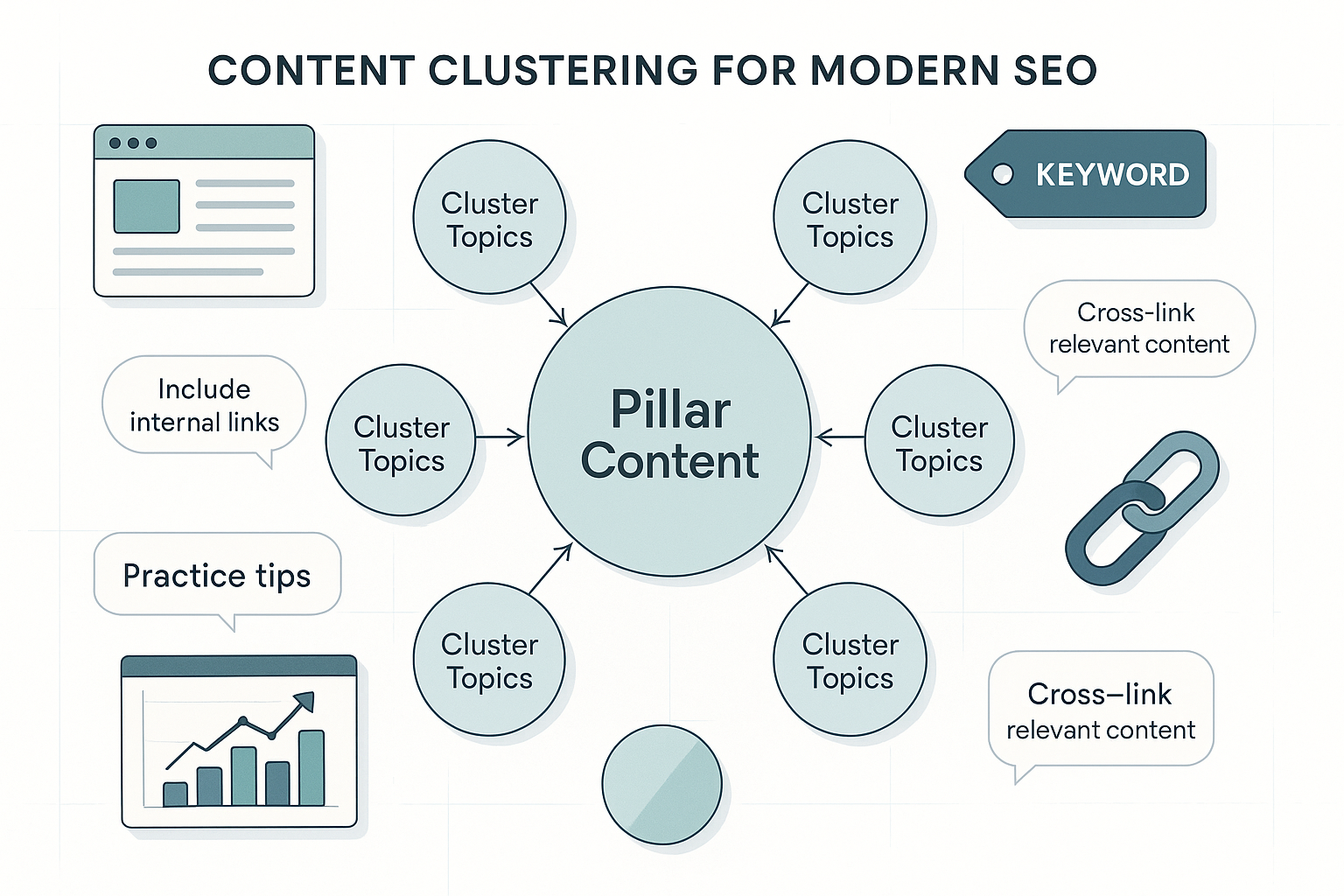

The most reliable results come from combining language generation with real on-page data. A practical workflow starts by defining a clear target keyword and intent. For a topic like how large language models help SEO, the reader is usually looking for explanation, examples, and guidance they can apply within their own workflow.

A large language model can then be used to create an initial content outline and draft that reflects that intent. At this stage, the focus should be on structure and clarity rather than perfect content marketing optimization. This keeps the content readable and prevents early over-editing. Once the draft exists, it can be analyzed in Page Optimizer Pro’s Content Editor to compare it against top-ranking competitors. These recommendations are driven by the POP Rank Engine, developed through years of testing and hundreds of SEO experiments, and designed to analyze hundreds of on-page parameters. This allows optimization decisions to be based on observed ranking behavior rather than assumptions, helping teams move from draft to refinement with greater confidence and speed.

This comparison highlights which terms, topics, and page elements appear most often in successful results. Instead of guessing what to add or remove, edits are guided by patterns already working in the search results. The content is then refined in a human-first order, starting with structure, followed by readability, and finishing with natural term usage that fits the context.
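To make the comparison idea concrete, here is a minimal Python sketch that lists terms appearing on most competitor pages but missing from a draft. It is an illustration only and not how Page Optimizer Pro or the POP Rank Engine works internally. The function names, the `min_share` threshold, and the sample text are all hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def term_coverage(competitor_pages):
    """Count how many competitor pages contain each term at least once."""
    coverage = Counter()
    for page in competitor_pages:
        # A set ensures each page counts a term only once.
        coverage.update(set(tokenize(page)))
    return coverage


def missing_terms(draft, competitor_pages, min_share=0.5):
    """Return terms found on at least min_share of competitor pages
    that do not appear anywhere in the draft."""
    draft_terms = set(tokenize(draft))
    coverage = term_coverage(competitor_pages)
    threshold = min_share * len(competitor_pages)
    return sorted(
        term
        for term, pages in coverage.items()
        if pages >= threshold and term not in draft_terms
    )


# Made-up sample text, used purely for illustration.
competitors = [
    "LLM drafts need schema markup and internal links",
    "Schema markup helps; internal links help LLM drafts rank",
    "Editors review LLM drafts before publishing",
]
draft = "Our LLM drafts are reviewed by editors"

print(missing_terms(draft, competitors, min_share=0.6))
```

A real comparison would also weigh where a term appears (headings, body, title) and how often, so a list like this is best treated as a set of prompts for an editor rather than a checklist to fill.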

Calls to action should be introduced only after the page has delivered value. For informational content, a CTA that invites readers to analyze their own page or test a tool feels aligned with intent and avoids interrupting the learning flow, while still providing a clear next step for motivated readers.

Measuring SEO Results and Keeping Quality High

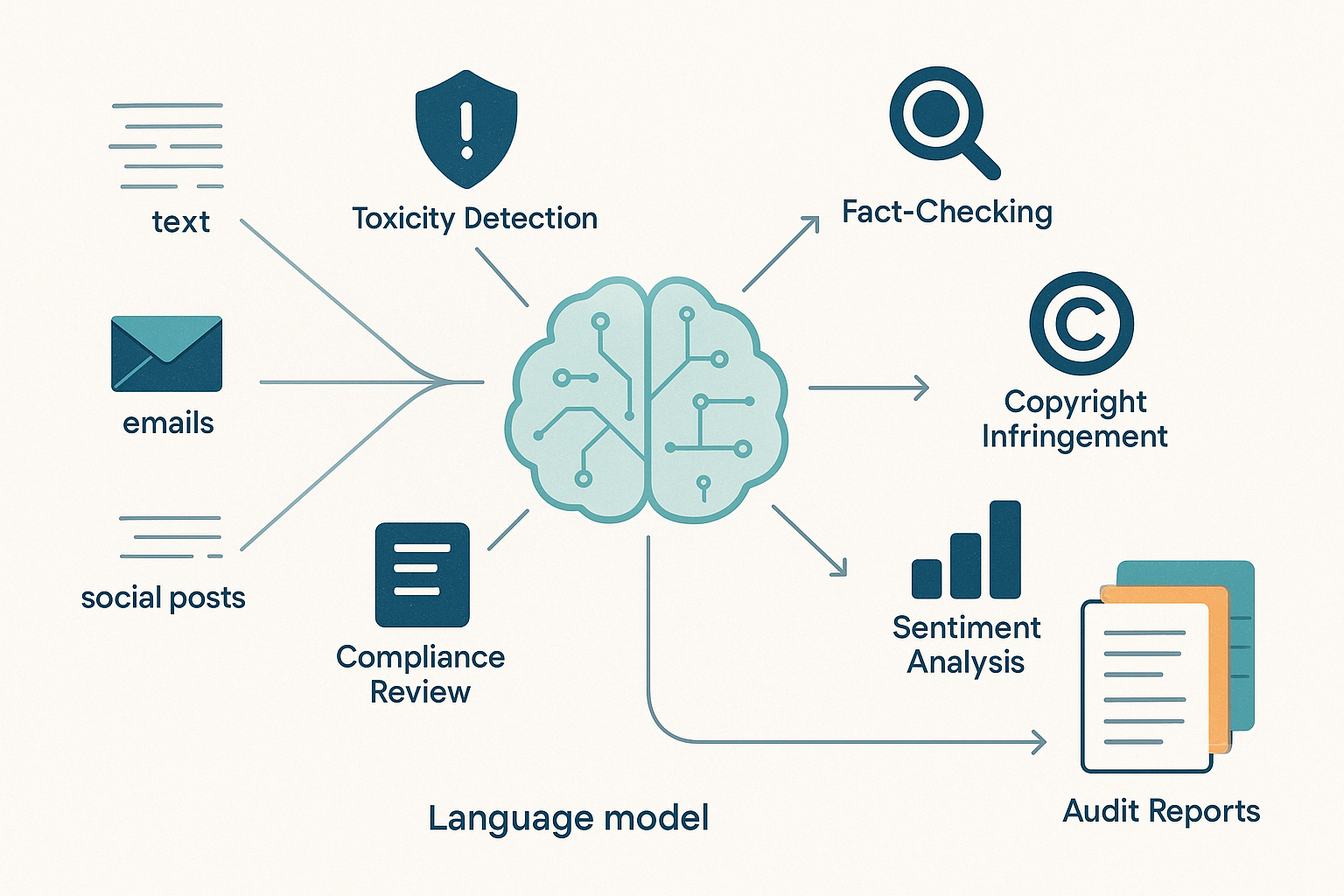

LLM-assisted content should be evaluated the same way as any other SEO work. Rankings, impressions, and clicks provide visibility signals, while engagement metrics help confirm whether the page meets user expectations. Comparing performance before and after updates makes it easier to tie improvements to specific changes. For in-house SEO teams, this structure supports ongoing monitoring of priority pages and helps track which updates influence performance over time.
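As a rough illustration of a before-and-after comparison, the Python sketch below computes the percentage change in impressions, clicks, and click-through rate for a single page. The `PageMetrics` class, the function names, and the numbers are hypothetical placeholders. In practice the figures would come from whatever search analytics export the team already uses.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    """Search visibility totals for one page over one reporting window."""
    impressions: int
    clicks: int

    @property
    def ctr(self):
        """Click-through rate, or 0.0 when there were no impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0


def percent_change(before, after):
    """Percentage change from before to after; None if before is zero."""
    if before == 0:
        return None
    return (after - before) / before * 100


def compare(before, after):
    """Summarize how each metric moved between two reporting windows."""
    return {
        "impressions": percent_change(before.impressions, after.impressions),
        "clicks": percent_change(before.clicks, after.clicks),
        "ctr": percent_change(before.ctr, after.ctr),
    }


# Placeholder numbers for illustration, not real results.
before = PageMetrics(impressions=1000, clicks=40)
after = PageMetrics(impressions=1200, clicks=60)

for metric, change in compare(before, after).items():
    label = "n/a" if change is None else f"{change:+.1f}%"
    print(f"{metric}: {label}")
```

Using equal-length windows and noting the date of each update alongside the numbers makes it easier to connect a change in performance to a specific edit.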

Quality issues usually appear when teams skip validation. Publishing AI-generated text without reviewing accuracy or relevance can lead to vague claims and missed opportunities. Overusing suggested terms can also reduce readability, which is why edits should always prioritize clarity first and maintain a natural flow.

Another common issue is relying on LLMs to decide strategy. While they can suggest structure and phrasing, they cannot replace understanding of the audience or the competitive landscape. Strong results come from using LLMs as a support layer, not the decision-maker, with final judgment staying with the SEO team.

If you want a clearer path from draft to on-page improvements, Page Optimizer Pro helps align content updates with what is already working in the search results, so each revision has a measurable purpose. This approach is especially useful for agencies that need to validate optimization work for clients and demonstrate progress tied directly to search performance, supporting stronger reporting and longer-term engagements.

Applying Large Language Model-Driven SEO at Scale

As teams grow, consistency becomes harder to maintain. LLMs make it easier to apply the same standards across new pages and existing digital content. When combined with Page Optimizer Pro, teams can create repeatable systems: draft quickly, validate against competitors, refine with purpose, and measure outcomes.

This approach works especially well for content refreshes. Instead of rewriting entire pages, teams can identify which sections need expansion or clarification and update only what matters. Over time, these focused updates help stabilize rankings and improve coverage without overwhelming writers or editors.

For marketing teams evaluating how to integrate LLM optimization into their SEO process and systems, the goal should be balance. Use automation to reduce friction, rely on data to guide decisions, and keep editorial judgment at the center. That combination supports sustainable growth while maintaining the quality users expect from Page Optimizer Pro content.